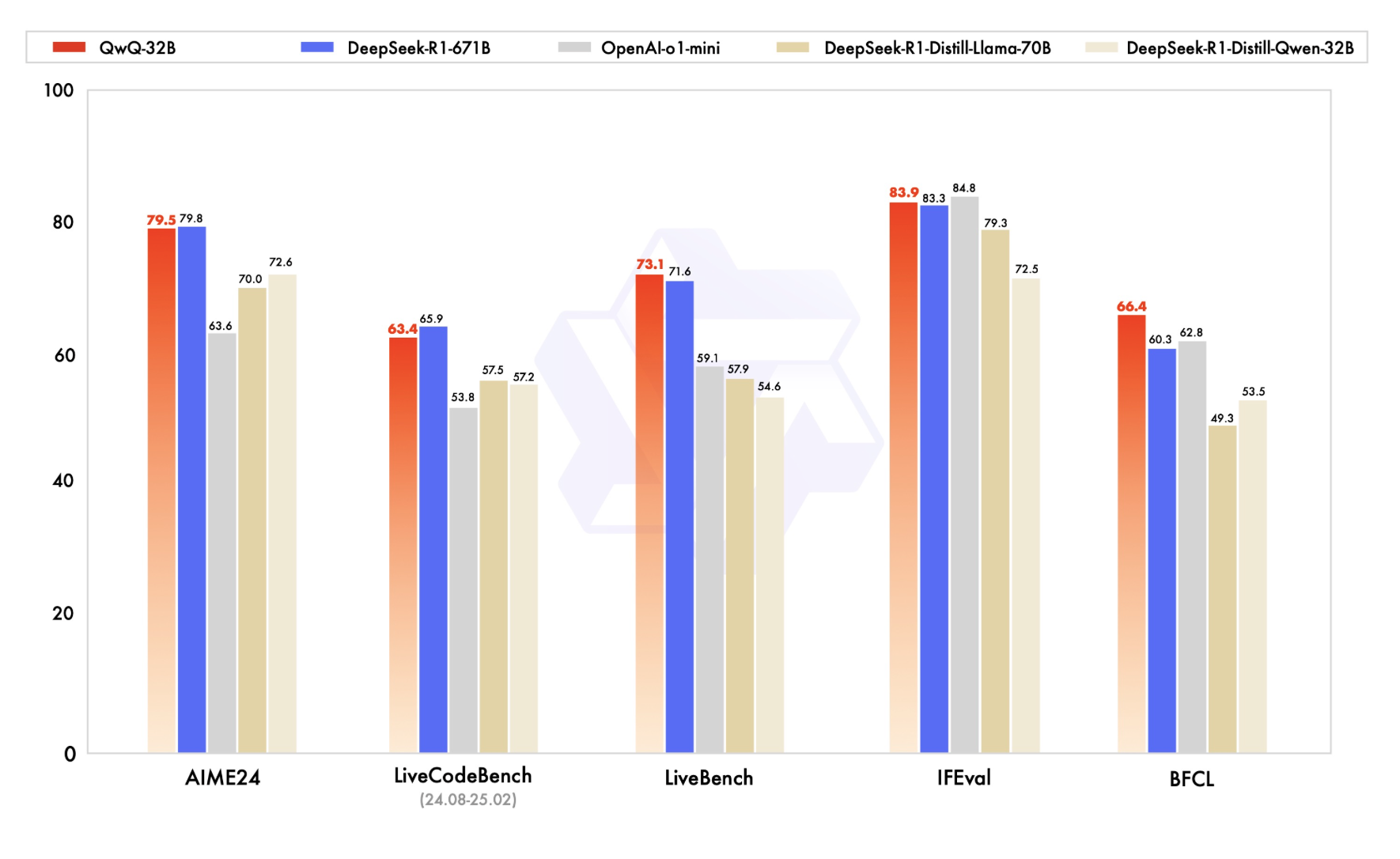

The AI landscape is shifting again. QWQ-32B from Qwen has just thrown down the gauntlet, demonstrating performance gains that not only surpass distilled DeepSeek R1 32B models but also challenge DeepSeek-R1-671B on key benchmarks. This isn't just incremental progress; we're witnessing a leap.

My initial experience with QWQ has been overwhelmingly positive. The speed improvements are tangible, and the subjective performance boost is hard to miss. For those using Ollama, it's readily available for immediate testing.
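
For anyone who wants to try it from a script rather than the command line, here is a minimal sketch of a call to a locally running Ollama server. It assumes Ollama is listening on its default port (11434) and that the model has been pulled under the tag `qwq`; both are assumptions about your setup, and the helper names `build_request` and `generate` are purely illustrative.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "qwq") -> urllib.request.Request:
    """Build a non-streaming generate request for a local Ollama server.

    The default model tag "qwq" is an assumption; use whatever tag you pulled.
    """
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "qwq") -> str:
    """Send the prompt and return the generated text (needs a running server)."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=600) as resp:
        return json.loads(resp.read())["response"]
```

With the server running, `print(generate("Explain step by step why 17 is prime."))` should return the model's answer. Reasoning models can take a while to respond, hence the generous timeout.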

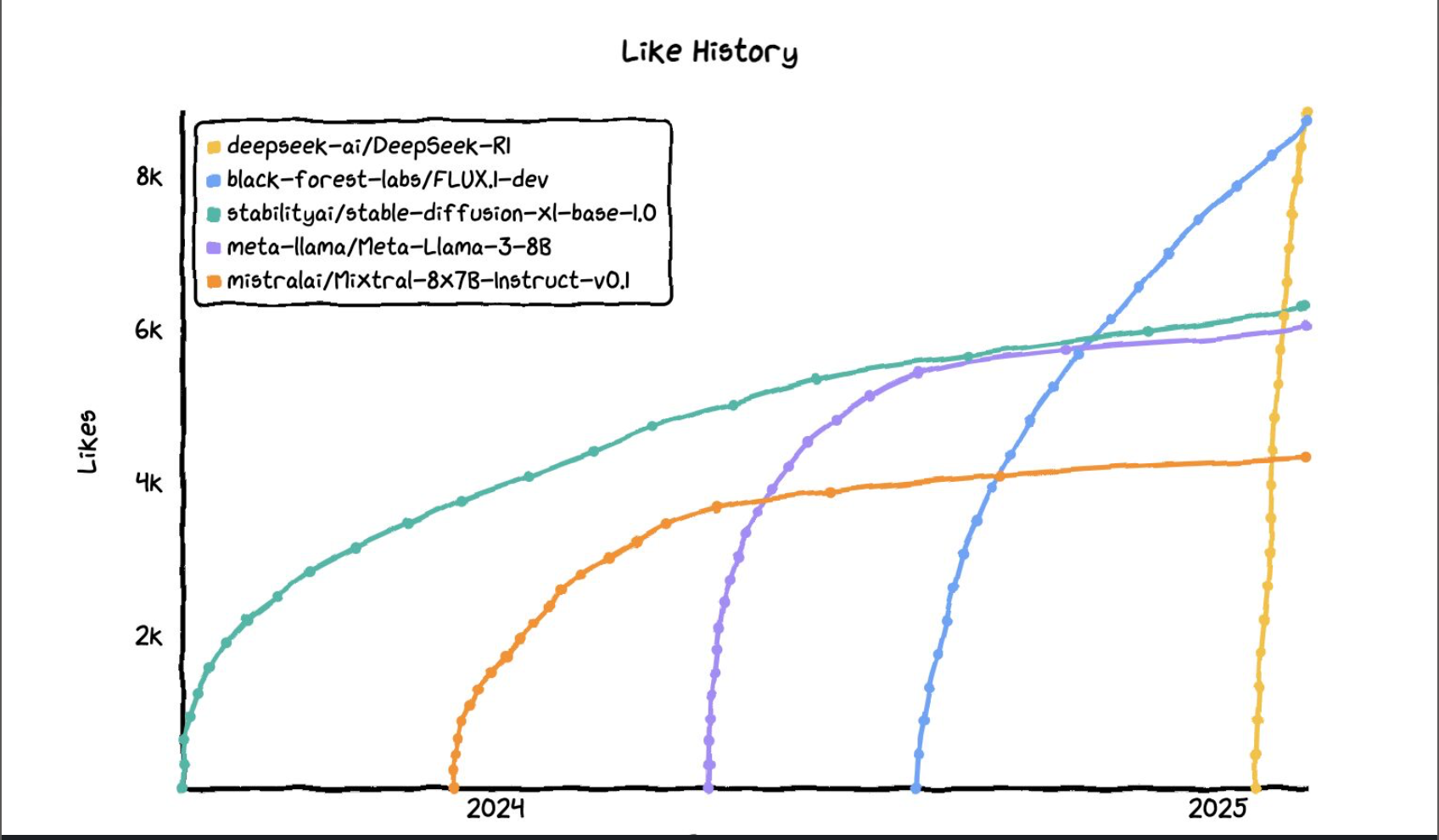

The rapid adoption of new models, as illustrated by Clem's recent data on model popularity (likes as a proxy), underscores a critical trend: the near-instantaneous embrace of superior models. DeepSeek's previous trajectory was a testament to this, but will QWQ accelerate the pace? I suspect so.

What does this mean for the future? We're still seeing significant improvements, often step changes, in model efficiency, performance and size. To take full advantage of this pace of innovation, organisations need to keep a strong focus on adaptable, flexible, testable and model-agnostic ML frameworks, particularly those that support LLM and agentic deployments. The ability to integrate and exploit these advances quickly is becoming paramount.
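
To make "testable and agnostic" a little more concrete, here is a minimal sketch of the idea: treat every model as a plain prompt-to-text function and run the same checks against each one, so swapping in a new release is a one-line change. Everything here (the `Case` type, `score`, `compare`, and the two stand-in backends) is hypothetical and for illustration only; a real setup would plug in actual model clients and a proper evaluation set.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# A backend is any callable that maps a prompt to a completion,
# regardless of which model or provider sits behind it.
Backend = Callable[[str], str]


@dataclass
class Case:
    prompt: str
    expected: str  # substring a correct answer should contain


def score(backend: Backend, cases: List[Case]) -> float:
    """Fraction of cases whose expected substring appears in the output."""
    if not cases:
        raise ValueError("need at least one test case")
    passed = sum(
        1 for c in cases if c.expected.lower() in backend(c.prompt).lower()
    )
    return passed / len(cases)


def compare(backends: Dict[str, Backend], cases: List[Case]) -> Dict[str, float]:
    """Run the same cases against every backend and report each score."""
    return {name: score(fn, cases) for name, fn in backends.items()}


# Stand-in backends (hypothetical); real ones would call a model.
def incumbent(prompt: str) -> str:
    return "I am not sure."


def challenger(prompt: str) -> str:
    return "The answer is 4." if "2 + 2" in prompt else "Paris"


cases = [Case("What is 2 + 2?", "4"), Case("Capital of France?", "paris")]
results = compare({"incumbent": incumbent, "challenger": challenger}, cases)
```

Because the harness only depends on the `Backend` signature, adding next week's model means registering one more function in the dictionary passed to `compare`, and nothing else changes.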

A week in AI feels like a year in just about any other field of technology. I'm excited about what next week will bring.